As a developer I’ve used a variety of APIs, and as a Developer Advocate at Bazaarvoice I help developers use our APIs. As a result I am keenly aware of the importance of good tools and of using the right tool for the job. The right tool can save you time and frustration. With the recent release of the Conversations API Inspector, an in-house web app built to help developers use our Conversations API, it seemed like the perfect time to survey tools that make using APIs easier.

The tools

This post surveys several tools for interacting with HTTP-based APIs. In it I introduce each tool and briefly explain how to use it. Each one has its advantages, and all do some combination of the following:

- Construct and execute HTTP requests

- Make requests other than GET, like POST, PUT, and DELETE

- Define HTTP headers, cookies and body data in the request

- See the response, possibly formatted for easier reading

Firefox and Chrome

Yes, a web browser can be a tool for experimenting with APIs, so long as the API request only requires basic GET operations with query string parameters. At our developer portal we embed sample URLs in our documentation where possible to make seeing examples super easy for developers.

Basic GET

http://api.example.com/resource/1?passkey=12345&apiversion=2

Browsers don’t necessarily present the response in a format easily readable by humans. Firefox users already get nicely formatted XML. To see similarly formatted JSON there is an extension called JSONView, and to see the response headers Live HTTP Headers will do the trick. Chrome also has a version of JSONView, and for XML there’s XML Tree. Both browsers offer built-in consoles that provide network information like headers and cookies.

cURL

The venerable cURL is possibly the most flexible of these tools while at the same time being the least usable. Because it is a command line tool some developers will balk at using it, but cURL’s simplicity and portability (*nix, Windows, Mac) make it an appealing choice. cURL can make just about any request, assuming you can figure out how. These tutorials provide some easy-to-follow examples and the man page has all the gory details.

I’ll cover a few common usages here.

Basic GET

Note the use of quotes. Without them the shell would interpret the & in the query string instead of passing it to cURL.

$ curl "http://api.example.com/resource/1?passkey=12345&apiversion=2"
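For comparison, here is a minimal Python sketch of how that query string is assembled from its parameters. The host and passkey are the placeholder values from the example above, not a real endpoint, and nothing is sent over the network.

```python
from urllib.parse import urlencode

# Placeholder values copied from the sample URL; not a real endpoint or passkey.
base = "http://api.example.com/resource/1"
params = {"passkey": "12345", "apiversion": "2"}

# urlencode joins the pairs with & and percent-encodes special characters.
url = base + "?" + urlencode(params)
print(url)
```

The & that separates the two parameters is the character the shell would otherwise act on, which is why the cURL command wraps the URL in quotes.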

Basic POST

Much more useful is making POST requests. The following submits data the same way a web form would (the default is Content-Type: application/x-www-form-urlencoded). Note that the argument to -d is the data sent in the request body.

$ curl -d "key1=some value&key2=some other value" http://api.example.com/resource/1
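To make the shape of a form-encoded POST concrete, the following Python sketch builds an equivalent request object without sending it. The field names and URL are the illustrative ones from the cURL command, and the standard library’s urllib stands in for cURL here.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Illustrative form fields, mirroring the cURL example above.
fields = {"key1": "some value", "key2": "some other value"}
body = urlencode(fields).encode("ascii")

# Supplying data makes this a POST; nothing is sent until urlopen() is called.
req = Request("http://api.example.com/resource/1", data=body)
req.add_header("Content-Type", "application/x-www-form-urlencoded")

print(req.get_method())
print(body.decode("ascii"))
```

One difference worth knowing: urlencode percent-encodes the values (spaces become +), whereas cURL’s -d sends the string as given. cURL offers --data-urlencode when you want it to do the encoding for you.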

POST with JSON body

Many APIs expect data formatted in JSON or XML instead of encoded key=value pairs. This cURL command sends JSON in the body by using -H 'Content-Type: application/json' to set the appropriate HTTP header.

$ curl -H 'Content-Type: application/json' -d '{"key": "some value"}' http://api.example.com/resource/1
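As a rough Python counterpart, this sketch serializes a dictionary to JSON and attaches the matching header. The payload and URL are the placeholders from the command above, and the request is only constructed, not sent.

```python
import json
from urllib.request import Request

# Placeholder payload matching the cURL example.
payload = {"key": "some value"}
body = json.dumps(payload).encode("utf-8")

# The Content-Type header tells the API how to interpret the body.
req = Request(
    "http://api.example.com/resource/1",
    data=body,
    headers={"Content-Type": "application/json"},
)

print(req.get_method(), req.get_header("Content-type"))
print(body.decode("utf-8"))
```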

POST with a file as the body

The previous example can get unwieldy quickly as the size of your request body grows. Instead of adding the data directly to the command line you can instruct cURL to upload a file as the body. This is not the same as a “file upload.” It just tells cURL to use the contents of a file as the request body.

$ curl -H 'Content-Type: application/json' -d @myfile.json http://api.example.com/resource/1

One major drawback of cURL is that the response is displayed unformatted. The next command line tool solves that problem.
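If you stay with cURL, a few lines of Python can reformat a JSON response yourself. This is only a sketch; the response body below is made up for illustration.

```python
import json

# Hypothetical compact response body, as cURL would print it.
raw = '{"id":1,"tags":["a","b"],"active":true}'

# Parse and re-serialize with indentation for easier reading.
pretty = json.dumps(json.loads(raw), indent=2, sort_keys=True)
print(pretty)
```

The same idea works inline by piping cURL’s output through python -m json.tool.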

HTTPie

HTTPie is a Python-based command line tool similar to cURL in usage. According to the GitHub page “Its goal is to make CLI interaction with web services as human-friendly as possible.” This is accomplished with “simple and natural syntax” and “colorized responses.” It runs on Linux, Mac OS X and Windows, and supports JSON, uploads and custom headers, among other things.

The documentation seems pretty thorough so I’ll just cover the same examples as with cURL above.

Basic GET

$ http "http://api.example.com/resource/1?passkey=12345&apiversion=2"

Basic POST

HTTPie assumes JSON as the default content type. Use --form to indicate Content-Type: application/x-www-form-urlencoded.

$ http --form POST api.example.com/resource/1 key1='some value' key2='some other value'

POST with JSON body

The = is for strings and := indicates raw JSON.

$ http POST api.example.com/resource/1 key='some value' parameter2:=2 parameter3:=false parameter4:='["http", "pies"]'
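To see what the two operators mean for the request body, this Python sketch reproduces the JSON that the command above should yield. It illustrates the = versus := distinction only and is not how HTTPie itself is implemented.

```python
import json

# Items written with '=' stay as strings.
string_fields = {"key": "some value"}

# Items written with ':=' are parsed as raw JSON before being added.
raw_json_fields = {
    "parameter2": "2",
    "parameter3": "false",
    "parameter4": '["http", "pies"]',
}

body = dict(string_fields)
for name, raw in raw_json_fields.items():
    body[name] = json.loads(raw)

print(json.dumps(body))
```

Had parameter2 been written with = instead, its value in the body would be the string "2" rather than the number 2.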

POST with a file as the body

HTTPie looks for a local file to include in the body after the < symbol.

$ http POST api.example.com/resource/1 < resource.json

Postman Chrome extension

My personal favorite is the Postman extension for Chrome. In my opinion it hits the sweet spot between functionality and usability by providing most of the HTTP functionality needed for testing APIs via an intuitive GUI. It also offers built-in support for several authentication protocols, including OAuth 1.0. There are a few things it can’t do because of restrictions imposed by Chrome, although there is a Python-based proxy to get around that if necessary.

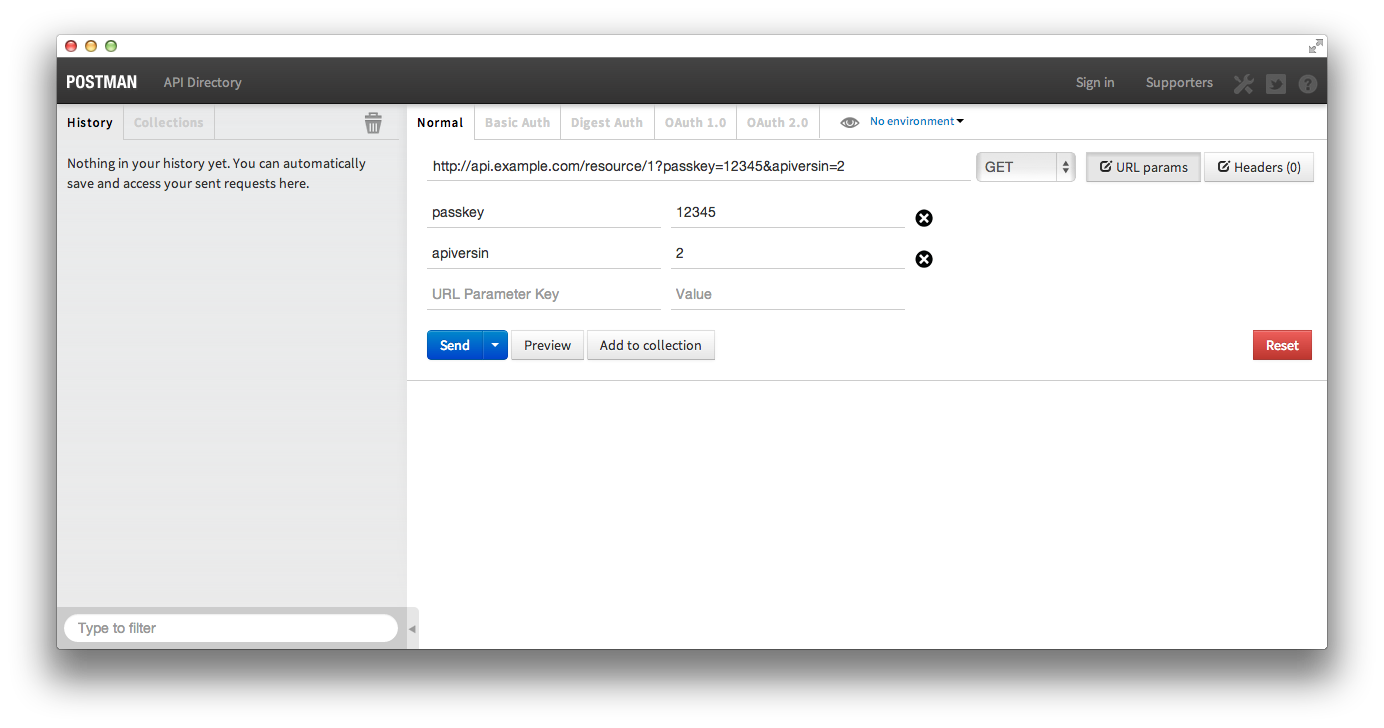

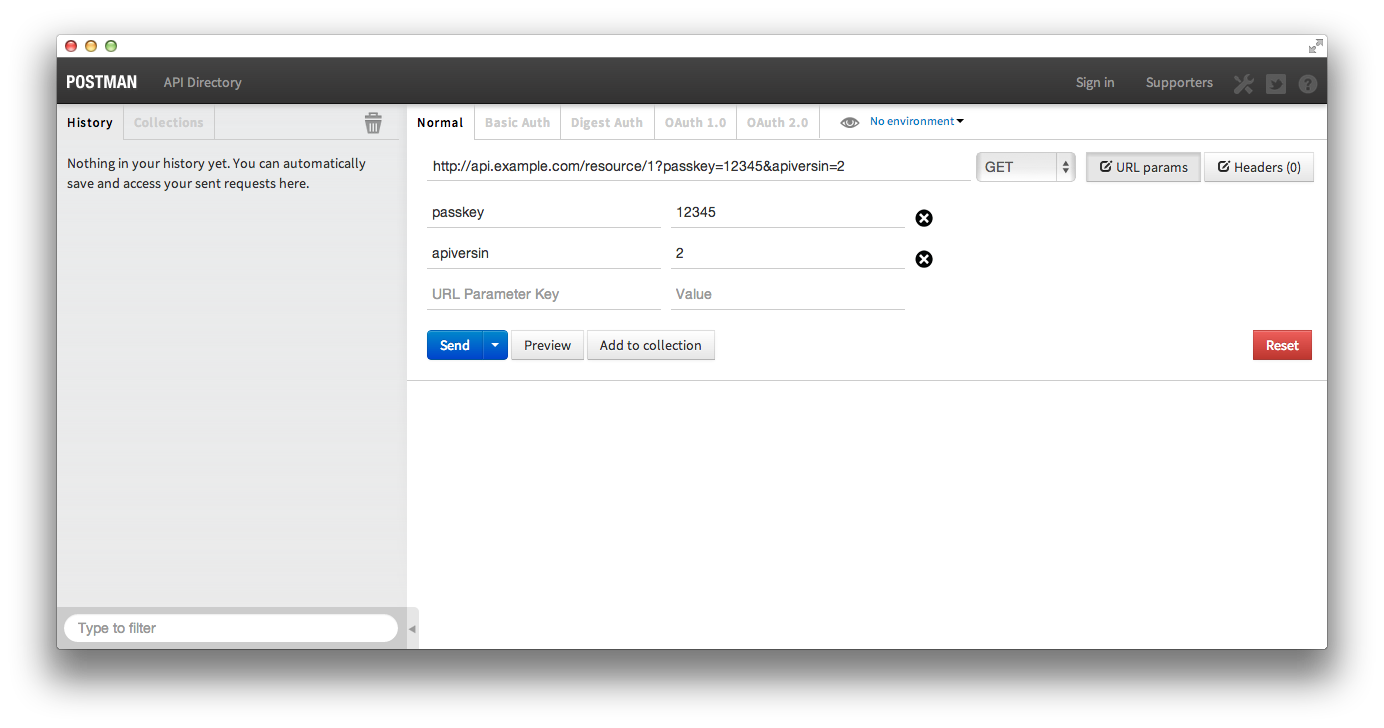

Basic GET

The column on the left stores recent requests so you can redo them with ease. The results of any request will be displayed in the bottom half of the right column.

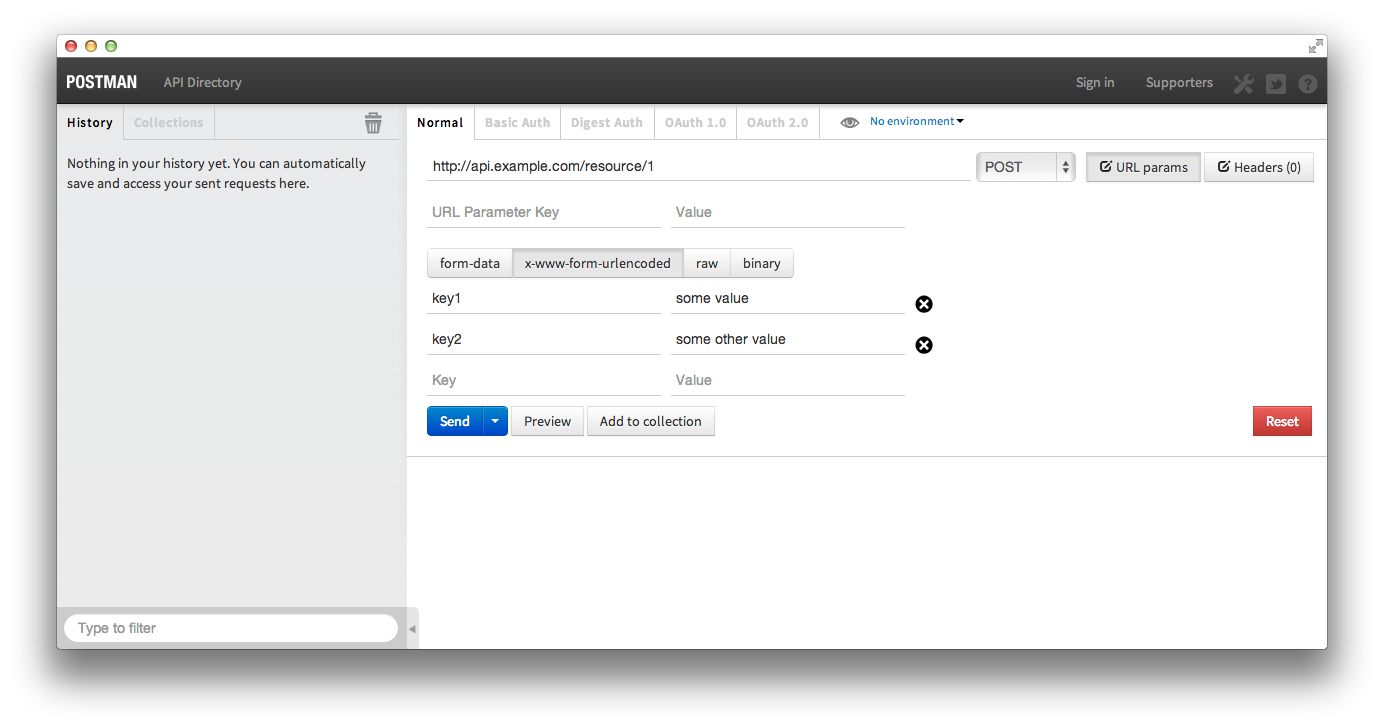

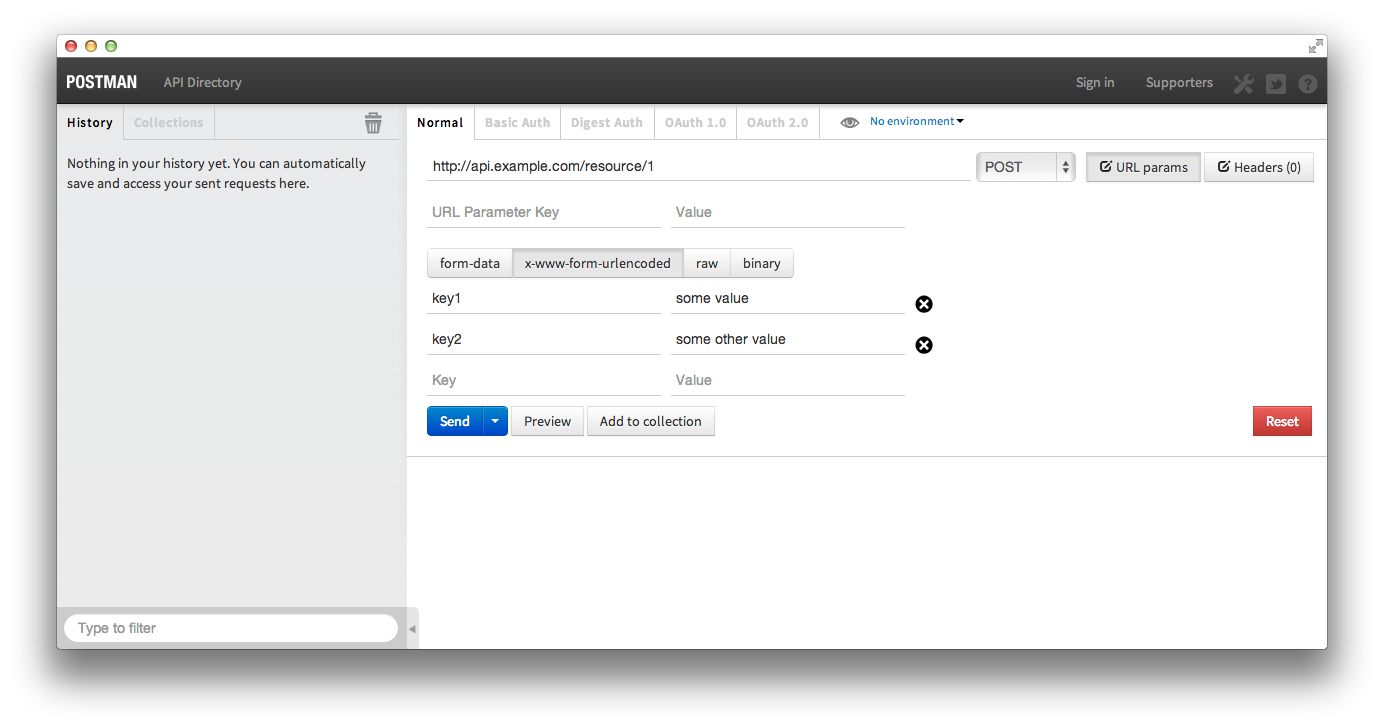

Basic POST

It’s possible to POST files, application/x-www-form-urlencoded data, and your own raw data.

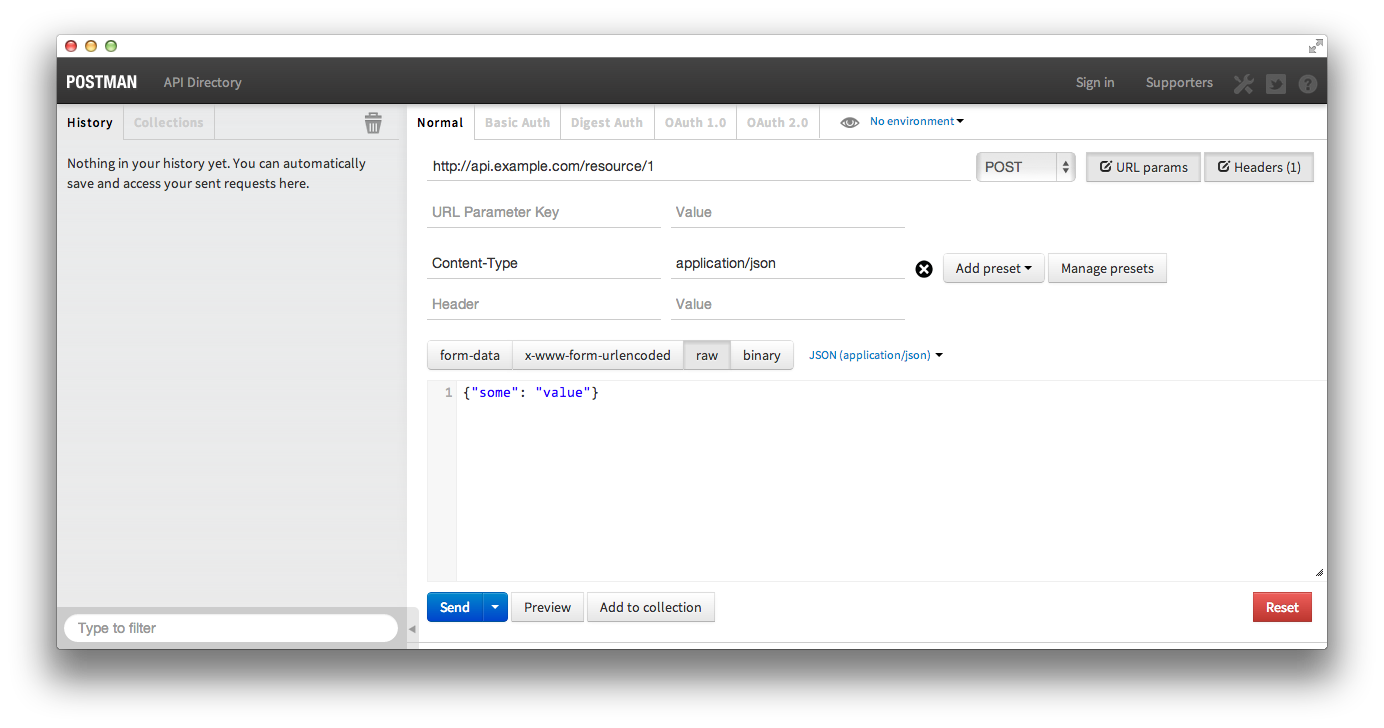

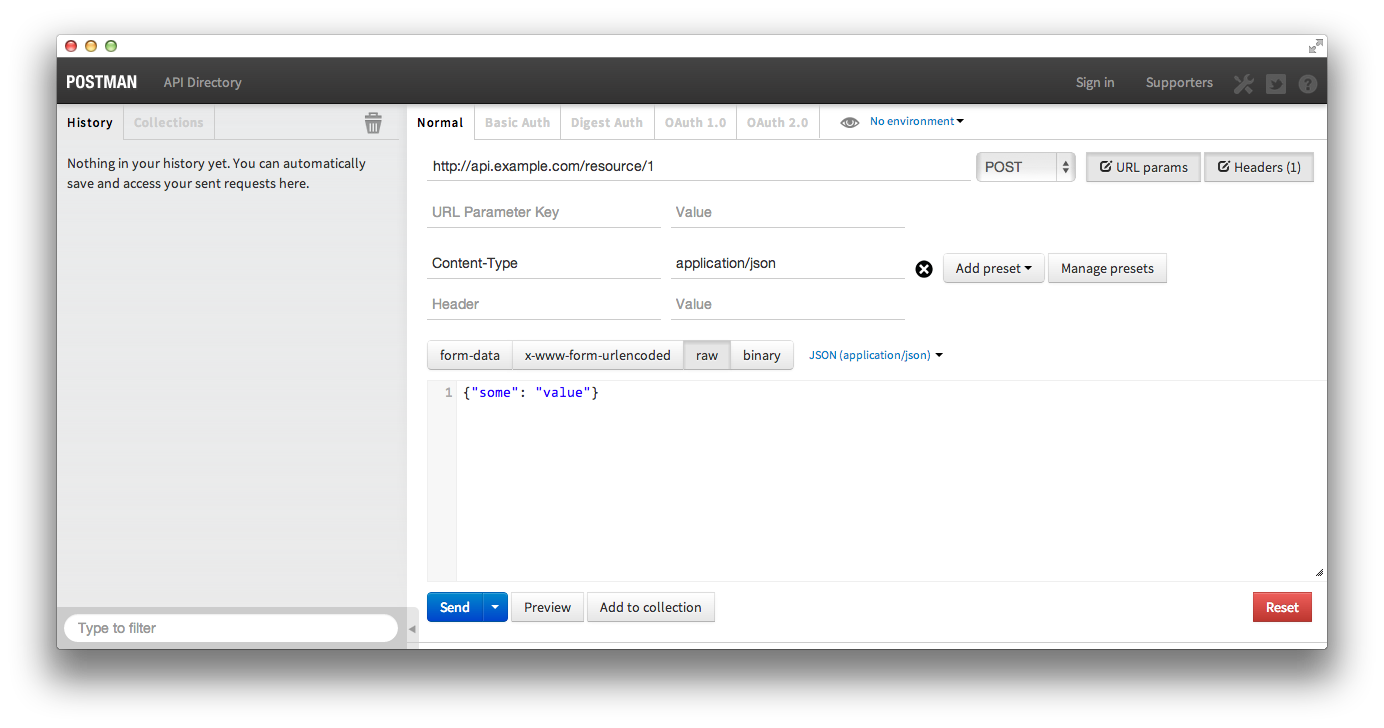

POST with JSON body

Postman doesn’t support loading a request body from a local file, but doing so isn’t necessary thanks to its easy-to-use interface.

RunScope.com

Runscope is a little different from the others, but no less useful. It’s a web service instead of a tool and is not open source, although they do offer a free option. It can be used much like the other tools to manually create and execute various HTTP requests, but that is not what makes it so useful.

Runscope acts as a proxy for API requests. Requests are made to Runscope, which passes them on to the API provider and then passes the responses back. In the process Runscope logs the requests and responses. At that point, to use their words, “you can view the request/response details, share requests with others, edit and retry requests from the web.”

Below is a quick example of what a Runscopeified request looks like. Read their official documentation to learn more.

before: $ curl "http://api.example.com/resource/1?passkey=12345&apiversion=2"

after: $ curl "http://api-example-com-bucket_key.runscope.net/resource/1?passkey=12345&apiversion=2"
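The rewrite in that before/after pair can be sketched in Python. The helper name runscope_url is hypothetical, and the rule it applies (dots in the host become dashes, then the bucket key and .runscope.net are appended) is inferred only from the single example above. Hosts that already contain hyphens or a port are not handled, so treat Runscope’s documentation as the authority.

```python
from urllib.parse import urlsplit, urlunsplit

def runscope_url(url, bucket_key):
    """Rewrite a URL's host in the style of the example above (assumed rule)."""
    parts = urlsplit(url)
    # Assumption: dots become dashes, then the bucket key is appended.
    host = parts.netloc.replace(".", "-") + "-" + bucket_key + ".runscope.net"
    return urlunsplit((parts.scheme, host, parts.path, parts.query, parts.fragment))

original = "http://api.example.com/resource/1?passkey=12345&apiversion=2"
print(runscope_url(original, "bucket_key"))
```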

Conclusion

If you’re an API consumer you should use some or all of these tools. When I’m helping developers troubleshoot their Bazaarvoice API requests I use the browser when I can get away with it and switch to Postman when things start to get hairy. There are other tools; I know because I omitted some of them. Feel free to mention your favorite in the comments.

(A version of this post was previously published on the author’s personal blog.)