Hi everyone! This is my first post to the Bazaarvoice Developer blog, and I’d like to take this opportunity to shed some light on the tools Bazaarvoice Labs has recently found very useful in creating the pilots and prototypes that ultimately morph into new products and features on the Bazaarvoice platform. Before I talk about our toolset, though, I’d like to give you a quick rundown of what Bazaarvoice Labs is, how our process works and why it’s important for us to be flexible in our toolset choices.

Bazaarvoice Labs is the new product research and development group at Bazaarvoice, with emphasis on “new” and “research”. We are a team of engineers who report to our Product Management team (rather than through the engineering group) and help our Product Managers realize their wildest (and potentially most game-changing) ideas. Every quarter we evaluate and prioritize new ideas proposed by our Product Management team, customers and Bazaarvoicers around the company in order to research them and create prototypes. The ideas we prioritize highest are those that come with big hairy assumptions but could change our business if they work. By building prototypes we’re able to suss out where the trouble might lie if we were to introduce the new product or feature to our entire customer base. We currently have over one thousand of the world’s biggest brands hosting their user-generated content on our platform, and many large services organizations to boot. The introduction of even a small new feature can have very large consequences for our organization. So on the risky stuff, we like to know where the gotchas lie. Some of the products spawned out of this process include BrandAnswers and Ratings and Reviews for Facebook (part of our SocialConnect Suite).

To build a prototype, we assign an engineer to work directly with a Product Manager or Product Designer. The two work together in an agile manner (agile with a little a, not a capital A) to create a tangible prototype that demonstrates the Product Manager’s idea, unencumbered by lengthy requirements documents or unnecessary process. It’s this one-to-one relationship that makes the process hum, gives the creative process a kick in the pants and really lets these ideas properly gestate. As more people get involved in a project, the number of communication paths between them grows much faster than the head count, so the project gets disproportionately harder to manage with each person you add and the need for process increases as a way to mitigate risk. By imposing a one-to-one structure on our prototyping teams we strip away any unnecessary obstacles to creativity and give real creative ownership to our Product Managers, Designers and Engineers. In a way, these teams become entrepreneurial cofounders as they attempt to prove their ideas. Additionally, by artificially constraining the initial project team to one engineer, we keep the team focused on building the Minimum Viable Product needed to prove its assumptions and build a business case before further investment is required.

Another nice side effect of this style of working is that the engineer can choose their own tool-chain for each new project. Since they’re working alone on a project, there’s no need to constrain the tool choices to the lowest common denominator of what every team member might already know or be familiar with. Of course, the engineer is free to reuse code or tools already in use at Bazaarvoice, but that choice ultimately lies with the engineer, who knows to optimize for speed of creation over other organizational considerations. A related benefit is that, in addition to proving business and product ideas, we can realistically evaluate emerging technologies and, where appropriate, integrate them into our core engineering stack (this happened with RequireJS, which has become an integral part of how we deploy JavaScript on our customers’ sites).

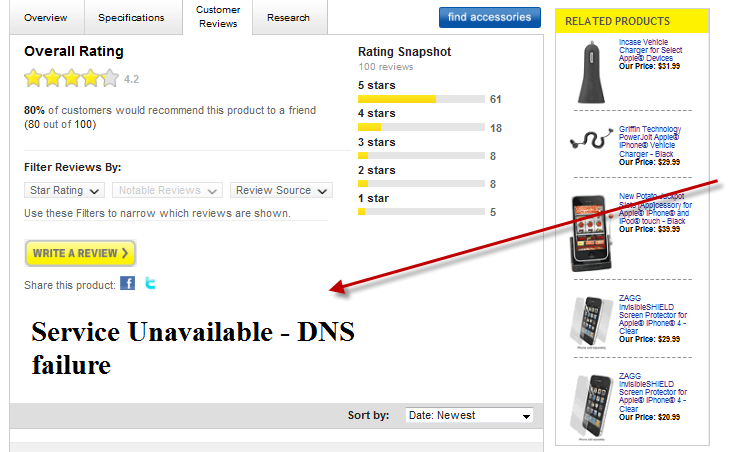

Sometimes simply building a prototype doesn’t tell us whether a product is viable, and we need to take further steps to find out. For example, we needed to answer the following question for Ratings and Reviews for Facebook: “Will people be willing to read and write product reviews inside a Facebook app?” In this case, a prototype wasn’t enough. We needed to progress to the next phase of the process and actually pilot the application we had built with a couple of customers. For this reason, the “prototypes” we build need to be more robust than you might initially think. Yes, we’re building concept cars in Labs, but our concept cars actually need to run.

We generally launch these pilots with three to five customers and generally won’t internationalize them. These restrictions keep us agile and help make sure we don’t have to build too much customizability into the pilots. Even though these pilots are only launched with a handful of customers, some of them are placed in very high-profile, high-traffic places (some getting over 100,000 hits per day). Pilots running right now include Nikon’s Ask and Answer for Facebook, Travelocity’s Social Connect Discovery Pilot (see the “Traveler Reviews” link) and TurboTax’s People Like You review search tool.

Of course, when we launch pilots we track the data and move rapidly to improve the product and build out a suitable business case for productization. In the pilot phase the engineers are free to launch new code whenever they choose and must play the roles of UX, server-side and operations engineer. Being chief cook and bottle washer on these projects frees the owning engineer (there’s still only one per project) to push releases as frequently as necessary to build that business case and observe how changes affect the project’s KPIs.

So what tools do we use to build software in Labs? To recap briefly, the tools we choose to build with in Bazaarvoice Labs must support two phases of a project:

- Prototyping: When the engineer needs to build a usable, tangible artifact targeted for internal consumption and demonstrations for clients.

- Pilots: Where we launch our new ideas with a few select clients and measure results to build a business case. Pilots must be stable and scalable, yet the engineer must still be able to iterate rapidly on the feature set.

Because our development cycles are so short at Bazaarvoice, projects must also be able to transition seamlessly between the prototype and pilot phases. The tools we select must therefore support the requirements mentioned above. Generally we can divide our tool-chain into a few broad categories:

- Operational Tools: Tools that help us keep things up and running

- Server-side Application Development Environments: Application containers, full-stack and micro frameworks. Tools to build web apps with.

- Data Storage and Management: SQL, NoSQL and whatever else you need

- Client-side Tools: Because there’s a lot you can do with just a browser nowadays

- Measurement Tools: Without the data to back up our hypotheses, there’s no science

In my next blog post, I’m going to step through each of these categories and talk about a couple of tools that we use and the projects that we’ve used them in. This will not be an exhaustive list, since we’re always evaluating new tools, but it should give you some insight into how and why we pick the tools that we do.