One of the many challenges of software testing has always been cross-browser testing. Despite the web’s overall move to more standards compliant browser platforms, we still struggle with the fact that sometimes certain CSS values or certain JavaScript operations don’t translate well in some browsers (cough, cough IE 8).

In this post, I’m going to show how the Curations team has upgraded their existing automation tools to allow for us to automate spot checking the visual display of the Curations front end across multiple browsers in order to save us time while helping to build a better product for our clients.

The Problem: How to save time and test all the things

The Curations front end is a highly configurable product that allows our clients to implement the display of moderated UGC made available through the API from a Curations instance.

This flexibility combined with BV’s browser support guidelines means there are a very large number ways Curations content can be rendered on the web.

Initially, rather than attempt to test ‘all the things’, we’ve codified a set of possible configurations that represent general usage patterns of how Curations is implemented. Functionally, we can test that content can be retrieved and displayed however, when it comes whether that the end result has the right look-n-feel in Chrome, Firefox and other browsers, our testing of this is largely manual (and time consuming).

How can we better automate this process without sacrificing consistency or stability in testing?

Our solution: Sikuli API

Sikuli is an open-source Java-based application and API that allows users to automate web, mobile and OS applications across multiple platforms using image recognition. It’s platform based and not browser specific, so it enables us to circumvent limitations with screen capture and compare features in other automation tools like Webdriver.

Imagine writing a test script that starts with clicking the home button within an iOS simulator, simply by providing the script a .png of the home button itself. That’s what Sikuli can do.

You can read more about Sikuli here. You can check out their project here on github.

Installation:

Sikuli provides two different products for your automation needs – their stand-alone scripting engine and their API. For our purposes, we’re interested in the Sikuli API with the goal to implement it within our existing Saladhands test framework, which uses both Webdriver and Cucumber.

Assuming you have Java 1.6 or greater installed on your workstation, from Sikuli.org’s download page, follow the link to their standalone setup JAR

http://www.sikuli.org/download.html

Download the JAR file and place it in your local workstation’s home directory, then open it.

Here, you’ll be prompted by the installer to select an installation type. Select option 3 if wish to use Sikuli in your Java or Jython project as well as have access to its command line options. Select option 4 if you only plan on using Sikuli within the scope of your Java or Jython project.

Once the installation is complete, you should have a sikuli.jar file in your working directory. You will want to add this to your collection of external JARs for your installed JRE.

For example, if you’re using Eclipse, go to Preferences > Java > Installed JREs, select your JRE version, click Edit and add Sikuli.jar to the collection.

Alternately, if you are using Maven to build your project, you can add Sikuli’s API to your project by adding the following to your POM.XML file:

<dependency>

<groupId>org.sikuli</groupId>

<artifactId>sikuli-api</artifactId>

<version>1.2.0</version>

</dependency>

Clean then build your project and now you’re ready to roll.

Implementation:

Ultimately, we wanted a method we could control using Cucumber that allows us to articulate a web application using Webdriver that could take a screen shot of a web application (in this case, an instance of Curations) and compare it to a static screen shot of specific web elements (e.g. Ratings and Review stars within the Curations display).

This test method would then make an assumption that either we could find a match to the static screen element within the live web application or have TestNG throw an exception (test failure) if no match could be found.

First, now that we have the ability to use Sikuli, we created a new helper class that instantiates an object from their API so we can compare screen output.

import org.sikuli.api.*;

import java.io.IOException;

import java.io.File;

/**

* Created by gary.spillman on 4/9/15.

*/

public class SikuliHelper {

public boolean screenMatch(String targetPath) {

new ImageTarget(new File(targetPath));

Once we import the Sikuli API, we create a simple class with a single class method. In this case, screenMatch is going to accept a path within the Java project relative to a static image we are going to compare against the live browser window. True or false will be returned depending on if we have a match or not.

//Sets the screen region Sikuli will try to match to full screen

ScreenRegion fullScreen = new DesktopScreenRegion();

//Set your taret to compare from

Target target = new ImageTarget(new File(targetPath));

The main object type Sikuli wants to handle everything with is ScreenRegion. In this case, we are instantiating a new screen region relative to the entire desktop screen area of whatever OS our project will run on. Without passing any arguments to DesktopScreenRegion(), we will be defining the region’s dimension as the entire viewable area of our screen.

double fuzzPercent = .9;

try {

fuzzPercent = Double.parseDouble(PropertyLoader.loadProperty("fuzz.factor"));

}

catch (IOException e) {

e.printStackTrace();

}

new ImageTarget(new File(targetPath));

Sikuli allows you to define a fuzzing factor (if you’ve ever used ImageMagick, this should be a familiar concept). Essentially, rather than defining a 1:1 exact match, you can define a minimal acceptable percentage you wish your screen comparison to match. For Sikuli, you can define this within a range from 0.1 to 1 (ie 10% match up to 100% match).

Here we are defining a default minimum match (or fuzz factor) of 90%. Additionally, we load in from a set of properties in Saladhand’s test.properties file a value which, if present can override the default 90% match – should we wish to increase or decrease the severity of test criteria.

target.setMinScore(fuzzPercent);

new ImageTarget(new File(targetPath));

Now that we know what fuzzing percentage we want to test with, we use target’s setMinScore method to set that property.

ScreenRegion found = fullScreen.find(target);

//According to code examples, if the image isn't found, the screen region is undefined

//So... if it remains null at this point, we're assuming there's no match.

if(found == null) {

return false;

}

else {

return true;

}

new ImageTarget(new File(targetPath));

This is where the magic happens. We create a new screen region called found. We then define that using fullScreen’s find method, providing the path to the image file we will use as comparison (target).

What happens here is that Sikuli will take the provided image (target) and attempt to locate any instance within the current visible screen that matches target, within the lower bound of the fuzzing percentage we set and up to a full, 100% match.

The find method either returns a new screen region object, or returns nothing. Thus, if we are unable to find a match to the file relative to target, found will remain undefined (null). So in this case, we simply return false if found is null (no match) or true of found is assigned a new screen region (we had a match).

Putting it all together:

To completely incorporate this behavior into our test framework, we write a simple cucumber step definition that allows us to call our Sikuli helper method, and provide a local image file as an argument for which to compare it against the current, active screen.

Here’s what the cucumber step looks like:

public class ScreenShotSteps {

SikuliHelper sk = new SikuliHelper();

//Given the image "X" can be found on the screen

@Given("^the image \"([^\"]*)\" can be found on the screen$")

public void the_image_can_be_found_on_the_screen(String arg1) {

String screenShotDir=null;

try {

screenShotDir = PropertyLoader.loadProperty("screenshot.path").toString();

}

catch (IOException e) {

e.printStackTrace();

}

Assert.assertTrue(sk.screenMatch(screenShotDir + arg1));

}

new ImageTarget(new File(targetPath));

}

We’re referring to the image file via regex. The step definition makes an assertion using TestNG that the value returned from our instance of SikuliHelper’s screen match method is true (Success!!!). If not, TestNG throws an exception and our test will be marked as having failed.

Finally, since we already have cucumber steps that let us invoke and direct Webdriver to a live site, we can write a test that looks like the following:

Feature: Screen Shot Test

As a QA tester

I want to do screen compares

So I can be a boss ass QA tester

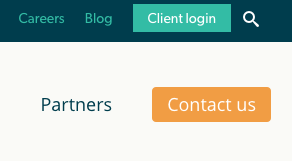

Scenario: Find the nav element on BV's home page

Given I visit "http://www.bazaarvoice.com"

Then the image "screentest1.png" can be found on the screen

new ImageTarget(new File(targetPath));

In this case, the image we are attempting to find is a portion of the nav element on BV’s home page:

Considerations:

This is not a full-stop solution to cross browser UI testing. Instead, we want to use Sikuli and tools like it to reduce overall manual testing as much as possible (as reasonably as possible) by giving the option to pre-warn product development teams of UI discrepancies. This can help us make better decisions on how to organize and allocate testing resources – manual and otherwise.

There are caveats to using Sikuli. The most explicit caveat is that tests designed with it cannot run heedlessly – the test tool requires a real, actual screen to capture and manipulate.

Obviously, the other possible drawback is the required maintenance of local image files you will need to check into your automation project as test artifacts. How deep you will be able to go with this type of testing may be tempered by how large of a file collection you will be able to reasonably maintain or deploy.

Despite that, Sikuli seems to have a large number of powerful features, not limited to being able to provide some level of mobile device testing. Check out the project repository and documentation to see how you might be able to incorporate similar automation code into your project today.